Welcome to Snapshots of Social Science - a quarterly newsletter where we bring you recent developments in the diverse fields of social science. Learn more about us here.

This month is the second part of a series looking at the evolution of artificial intelligence, from Cybernetics to ChatGPT. Inspired by the popularity of ChatGPT, this newsletter dives into great moments in the history of how humans have perceived intelligence in computers. The first part of the series explored the Macy conferences and the field of cybernetics. In this part, we present some moments from the second half of the 20th century that laid the building blocks of artificial intelligence as we know it today. This account is in no way exhaustive: the field is vast, and we have picked just a few moments from the long trajectory of modern artificial intelligence.

Before we begin, please consider subscribing to ICJS Social Science by clicking the button below! This will ensure that each edition of our newsletter makes its way to you every month. We would truly appreciate it if you spread the word about us as well - our impact grows with each additional reader.

A short recap

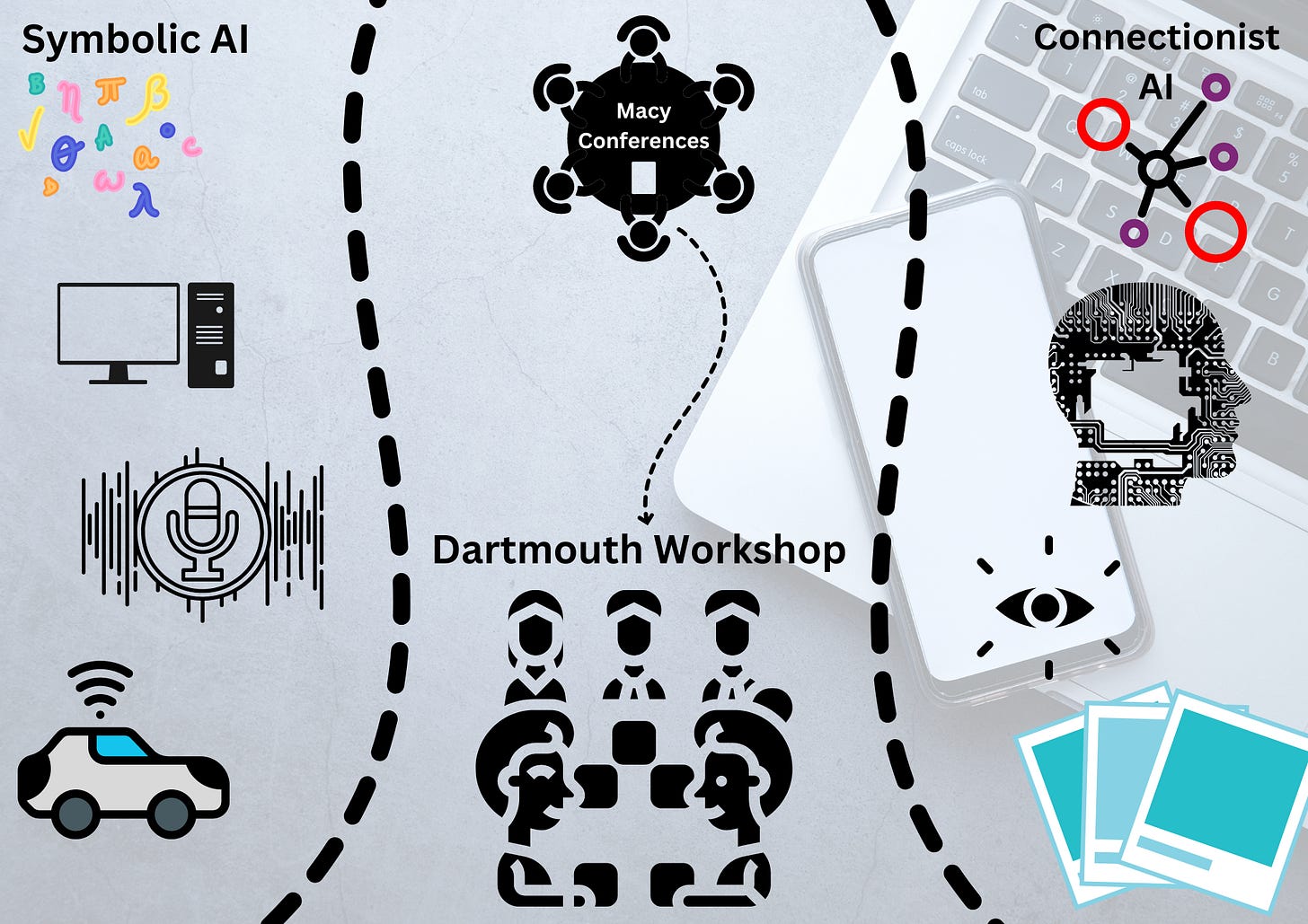

In part 1 of this series, we explored the Macy conferences, where a new area of study called cybernetics was taking shape. These conferences brought together experts from diverse fields like mathematics, biology, physics, neuroscience, philosophy, linguistics, and sociology. We also saw how connections between biology and computer science were forming (through ideas like neurons, DNA, self-replicating machines, and the transfer of information), which laid the foundation for cybernetics. Against this backdrop came the 1956 Dartmouth workshop.

Research Map

Dartmouth 1956 - AI workshop

In August 1955, John McCarthy, Marvin Minsky, Nathaniel Rochester, and Claude Shannon (remember him from information theory?) wrote a proposal for a two-month-long research project on what McCarthy called artificial intelligence. Their aim was to study how any form of intelligence can be described precisely enough for a computer to mimic it. They outlined seven aspects of this problem:

“Automatic Computers” - discovering how to write better programs that make full use of the computer speed and memory available.

“How Can a Computer be Programmed to Use a Language” - studying how computers can use language like humans do: combine words to form sentences using simple grammar rules.

“Neuron Nets” - how a network of neurons can be used to represent a concept.

“Theory of the Size of a Calculation” - developing a way to measure how efficient a calculation is.

“Self-improvement” - can a machine improve itself?

“Abstractions” - studying how machines can represent abstractions from data.

“Randomness and Creativity” - the idea that randomness, when guided by intuition, generates creativity.

The Dartmouth workshop brought together some of the pioneers and visionaries of AI, such as John McCarthy, Marvin Minsky, Claude Shannon, Herbert Simon, and Allen Newell. As you may recall, many attendees of this workshop (as listed in the notes of Ray Solomonoff, one of the attendees and a pioneer of machine learning) had also attended the Macy conferences.

But what was the major development of this workshop?

Symbolic AI - the new approach

The Dartmouth workshop heavily emphasized symbolic artificial intelligence. In very simple terms, symbolic AI holds that a computer gets its intelligence from the logical manipulation of symbols, that is, language-like representations of objects and relationships.

Symbolic AI has important modern-day applications. Expert systems like medical diagnosis systems use symbols and a set of rules to deduce what illness someone may have based on the symptoms. Autonomous cars use symbols around them to deduce the next course of action. Also, natural language processing (used in digital assistants like Alexa and Siri) uses logical structures and symbol manipulation to give relevant information as output.

Along with these, let us take a look at the applications of symbolic AI back in the day:

Logic Theorist - a ‘thinking machine,’ created by Allen Newell, Herbert Simon, and Cliff Shaw in 1956, that could prove theorems in symbolic logic from Whitehead and Russell’s famous book Principia Mathematica.

General Problem Solver - a computer program that grew out of the Logic Theorist. Unlike its predecessor, it tried to closely mimic how humans (college students) worked out proofs, following the logical procedure they used while solving such problems.

ELIZA - a natural language processing program created by Joseph Weizenbaum in 1966, that could converse with a human being on a typewriter. It used simple rules to manipulate the human being’s statements and then generate responses.

SHRDLU - a program for understanding natural language, that was created by Terry Winograd around 1968 to 1970. It could respond to human instructions and do actions in a small ‘world’ that consisted of blocks, interacting with the human at the same time by asking clarifying questions.

MYCIN - an early rule-based medical diagnosis system, developed in 1974, to assist physicians in selecting the proper therapy to tackle bacterial infections.
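For readers who like to see ideas in code: of the programs above, ELIZA is the easiest to sketch in a few lines. The Python snippet below is only a minimal illustration of the idea of "simple rules to manipulate the human being's statements." The patterns, the pronoun swaps, and the names `RULES`, `REFLECTIONS`, and `respond` are made up for this example and are not taken from Weizenbaum's original program.

```python
import re

# Pronoun swaps so that "my" in the input becomes "your" in the reply.
# (Illustrative only; not the original ELIZA script.)
REFLECTIONS = {"i": "you", "my": "your", "me": "you", "am": "are"}

# (pattern, response template) pairs, tried in order.
# These rules are hypothetical examples.
RULES = [
    (re.compile(r"\bi feel (.*)", re.IGNORECASE), "Why do you feel {0}?"),
    (re.compile(r"\bi am (.*)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bmy (.*)", re.IGNORECASE), "Tell me more about your {0}."),
]

DEFAULT_REPLY = "Please go on."


def reflect(fragment):
    """Swap first-person words for second-person ones, word by word."""
    words = fragment.lower().split()
    return " ".join(REFLECTIONS.get(word, word) for word in words)


def respond(statement):
    """Return the reply of the first rule whose pattern matches."""
    cleaned = statement.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(cleaned)
        if match:
            return template.format(reflect(match.group(1)))
    # No rule matched: fall back to a generic prompt.
    return DEFAULT_REPLY


if __name__ == "__main__":
    print(respond("I feel sad about my job."))
    print(respond("The weather is nice"))
```

Notice that the program understands nothing about jobs or sadness. It only rearranges the symbols it is given, which is exactly why a statement that matches no rule gets a generic reply.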

But…some problems with Symbolic AI

While the hype around Symbolic AI peaked in the 1970s and 1980s, it faced some problems. It worked well for systems that had clear goals, symbols, and worked within a set framework. But what if the computer encountered unprecedented data? Or what if the same problem was presented to it using unknown symbols? Was there any way that the system could learn?

Anything that couldn’t be explicitly coded as symbols and rules couldn’t be dealt with by symbolic AI.
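This strength and weakness can be seen in a toy rule-based system. The sketch below, written in Python, uses a technique called forward chaining: it keeps applying "if these facts, then this conclusion" rules until nothing new can be derived. The symptoms, conclusions, and function names are invented for illustration; this is not how MYCIN or any real medical system was built, and it is certainly not medical advice.

```python
# Toy rule base: each rule maps a set of required facts to a conclusion.
# All symptoms and conclusions here are invented for illustration.
RULES = [
    ({"fever", "cough"}, "respiratory_infection"),
    ({"respiratory_infection", "chest_pain"}, "possible_pneumonia"),
    ({"sneezing", "itchy_eyes"}, "allergy"),
]


def forward_chain(facts, rules):
    """Apply rules repeatedly until no new conclusion can be derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            # A rule fires when all of its conditions are already known.
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


def diagnose(symptoms):
    """Return only the derived conclusions, sorted for stable output."""
    return sorted(forward_chain(symptoms, RULES) - set(symptoms))


if __name__ == "__main__":
    # Known symbols: the rules chain together and reach a conclusion.
    print(diagnose({"fever", "cough", "chest_pain"}))
    # Same situation, but "fever" is written as an unknown symbol.
    print(diagnose({"high_temperature", "cough", "chest_pain"}))
```

The first call derives two conclusions, while the second derives none: the system has no rule for the symbol `high_temperature`, and it has no way to learn that it means the same thing as `fever`.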

…and the Connectionist approaches

An approach that evolved in parallel with symbolic AI was connectionism. It started with the simple model of a neuron developed by McCulloch and Pitts in 1943 (remember from part 1?), which Rosenblatt implemented as the perceptron in 1958. The connectionist approach was based on how the human brain works: interconnected neurons and their activation bring about intelligent actions and outputs. Was there a way that computers could use the same principle to get to human-like intelligence?

There indeed was, and today, connectionist AI is still a dominant approach (think of deep learning and neural networks). But how did the perceptron develop into full-fledged neural networks? And how did it come to dethrone symbolic AI?

After Marvin Minsky and Seymour Papert published Perceptrons, a book about the limitations of the perceptron, in 1969, it wasn’t until the 1980s that the perceptron fully developed into a neural network. In 1986, a book called Parallel Distributed Processing was published. It was written by Rumelhart, McClelland, and their group, who argued that computers should be able to process input just as the brain does: simultaneously.

If we see an object, our brain is able to process the object’s shape, size, speed, etc. all at once, in a fraction of a second. Similarly, a computer can consist of a network of neurons, where the input is broken down into parts that are processed by different neurons at the same time. A breakthrough in AI, this book, coupled with the development of algorithms like backpropagation, saw connectionism come roaring back to take its place in the AI narrative.
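To make the starting point of this story concrete, here is a minimal Python sketch of a single perceptron that learns from examples instead of following hand-written rules. It uses the classic perceptron learning rule: whenever the prediction is wrong, nudge the weights toward the correct answer. The function names, the number of training rounds, and the choice of logic gates as training data are our own assumptions for illustration. The XOR example is the well-known case of a pattern that a single perceptron cannot learn, the kind of limitation that later motivated multi-layer networks.

```python
def predict(weights, bias, inputs):
    """Fire (output 1) if the weighted sum of inputs reaches the threshold."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total >= 0 else 0


def train_perceptron(samples, epochs=20, learning_rate=1):
    """Perceptron learning rule: adjust weights only when a prediction is wrong."""
    weights = [0, 0]
    bias = 0
    for _ in range(epochs):
        for inputs, target in samples:
            error = target - predict(weights, bias, inputs)
            weights = [w + learning_rate * error * x
                       for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias


def count_errors(weights, bias, samples):
    """Number of samples the trained perceptron still gets wrong."""
    return sum(predict(weights, bias, inputs) != target
               for inputs, target in samples)


# Truth tables used as training data (an illustrative choice).
AND_SAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR_SAMPLES = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

if __name__ == "__main__":
    and_weights, and_bias = train_perceptron(AND_SAMPLES)
    print("AND errors:", count_errors(and_weights, and_bias, AND_SAMPLES))

    xor_weights, xor_bias = train_perceptron(XOR_SAMPLES)
    print("XOR errors:", count_errors(xor_weights, xor_bias, XOR_SAMPLES))
```

The perceptron learns AND perfectly, but however long it trains, it always gets at least one XOR case wrong, because no single straight line can separate the XOR outputs. Networks with additional layers of neurons, trained with algorithms like backpropagation, overcome this.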

AI Winters and Springs

As both these approaches in Artificial Intelligence evolved, the Artificial Intelligence community came to know of two seasons—Winter and Spring. Winter is a period of reduced funding, where the general interest in AI goes down. An AI Spring is the opposite. This cycle of Winters and Springs began in the 1970s, when many breakthroughs that had originally been promised had not borne fruit. Researchers realized that AI is harder than originally believed. But some small discoveries or inventions led to brief periods of re-interest and government funding. The future of AI looked bleak in the Winters but picked up pace during Springs.

We are probably seeing an AI Spring right now. But more about that next time.

For next time

We will start with modern-day AI, looking at how Deep Blue beat the world chess champion, Garry Kasparov.

Some links you may find helpful

History of A.I.: Artificial Intelligence (Infographic) | Live Science

The History of Artificial Intelligence - Science in the News (harvard.edu)

Spread the Word!

Thank you so much for reading! Please share this newsletter with anyone who would be interested. Press the button below to share!